The Professional Dataset Consistency Report identifies critical inconsistencies across six datasets, pointing to potential inaccuracies and to variations in how data is categorized. These issues undermine the reliability of the datasets and, by extension, of any strategic decisions based on them. This report outlines the methods used to assess consistency, the key discrepancies found, their implications for data integrity, and recommendations for improving that integrity and restoring trust in the data.

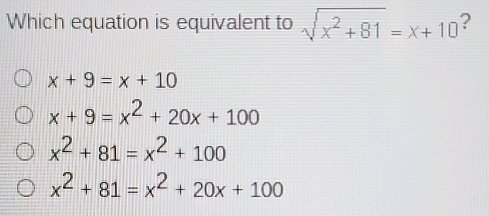

Methodologies for Assessing Dataset Consistency

Researchers evaluating dataset consistency use a range of methods that systematically analyze the integrity and reliability of the data.

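Key approaches include data validation, which checks each record against predefined standards (for example, required fields, permitted values, and expected formats), and consistency checks, which confirm that the datasets apply those standards uniformly.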
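As a minimal sketch of a record-level validation check, the Python below tests each record against a small set of predefined rules. The field names (`id`, `category`, `date`), the permitted categories, and the ISO date requirement are hypothetical assumptions for illustration; the report does not specify its standards.

```python
from datetime import datetime

# Hypothetical "predefined standard": the fields, permitted values, and
# date format below are illustrative assumptions, not taken from the report.
ALLOWED_CATEGORIES = {"retail", "wholesale", "online"}


def is_iso_date(value):
    """Return True if value is a valid calendar date written as YYYY-MM-DD."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False


# Field name -> predicate that a present value must satisfy.
RULES = {
    "id": lambda v: isinstance(v, int) and v > 0,
    "category": lambda v: v in ALLOWED_CATEGORIES,
    "date": is_iso_date,
}


def validate_record(record):
    """Return a list of (field, problem) pairs; an empty list means the record passes."""
    problems = []
    for field, rule in RULES.items():
        if record.get(field) in (None, ""):
            problems.append((field, "missing"))
        elif not rule(record[field]):
            problems.append((field, "invalid"))
    return problems


records = [
    {"id": 1, "category": "retail", "date": "2023-04-01"},
    {"id": 2, "category": "Retail", "date": "01/04/2023"},
    {"id": 3, "date": "2023-04-02"},
]
for r in records:
    print(r["id"], validate_record(r))
# 1 []
# 2 [('category', 'invalid'), ('date', 'invalid')]
# 3 [('category', 'missing')]
```

Returning a list of problems, rather than a single pass/fail flag, keeps enough detail for later error analysis.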

Key Discrepancies Identified

Applying these methods revealed several key discrepancies that warrant further examination.

Data validation processes varied significantly, pointing to potential inaccuracies in recorded entries.

An error analysis also revealed inconsistent formatting and categorization across the datasets, indicating a need for stronger protocols to protect the integrity and reliability of the information.
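One way such cross-dataset inconsistencies could be surfaced is sketched below, assuming each dataset is a list of records with hypothetical `category` and `date` fields. It groups category labels that differ only in case or surrounding whitespace, and records which date formats each dataset uses. The candidate formats and dataset names are illustrative assumptions, not drawn from the report.

```python
from collections import defaultdict
from datetime import datetime

# Candidate date formats to recognize (an illustrative assumption).
DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y"]


def detect_date_format(value):
    """Return the first candidate format that parses value, or None."""
    for fmt in DATE_FORMATS:
        try:
            datetime.strptime(value, fmt)
            return fmt
        except ValueError:
            continue
    return None


def find_inconsistencies(datasets):
    """Compare category labels and date formats across named datasets.

    datasets: dict mapping dataset name -> list of records (dicts) that
    have hypothetical 'category' and 'date' fields.
    """
    label_variants = defaultdict(set)  # normalized label -> raw spellings seen
    formats_used = defaultdict(set)    # dataset name -> date formats seen
    for name, records in datasets.items():
        for rec in records:
            raw = rec["category"]
            label_variants[raw.strip().lower()].add(raw)
            formats_used[name].add(detect_date_format(rec["date"]))

    # A category is inconsistent if it is spelled more than one way.
    conflicting_labels = {
        key: sorted(spellings)
        for key, spellings in label_variants.items()
        if len(spellings) > 1
    }
    # Formatting is inconsistent if more than one date format appears overall.
    all_formats = set().union(*formats_used.values())
    mixed_formats = len(all_formats) > 1
    return conflicting_labels, mixed_formats, dict(formats_used)


datasets = {
    "sales_a": [{"category": "retail", "date": "2023-04-01"}],
    "sales_b": [{"category": "Retail ", "date": "01/04/2023"}],
    "sales_c": [{"category": "online", "date": "2023-04-03"}],
}
labels, mixed, formats = find_inconsistencies(datasets)
print(labels)  # {'retail': ['Retail ', 'retail']}
print(mixed)   # True
```

Note that a string such as `01/04/2023` is ambiguous between day-first and month-first conventions; the sketch simply reports the first candidate format that matches.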

Data Integrity Issues and Implications

Data integrity is often assumed to be the foundation of a trustworthy dataset, yet these findings reveal significant issues that can compromise the validity of the information.

Data validation processes have proven inadequate, leading to frequent discrepancies.

Error analysis reveals recurring patterns that suggest systemic flaws rather than isolated mistakes. These flaws undermine the reliability of conclusions drawn from the datasets and, in turn, the decision-making and strategic planning that depend on them.
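To illustrate how error analysis can separate systemic flaws from isolated mistakes, the sketch below counts how many datasets exhibit each error type. It assumes errors have already been collected as hypothetical `(field, problem)` pairs per dataset, and it treats an error type as systemic when it appears in at least half of the datasets; that threshold is an illustrative choice, not a standard.

```python
from collections import Counter


def systemic_errors(errors_by_dataset, min_share=0.5):
    """Return (error_type, dataset_count) pairs for widespread error types.

    errors_by_dataset: dict mapping dataset name -> list of (field, problem)
    pairs. An error type is flagged when it occurs in at least min_share
    of the datasets (an illustrative threshold).
    """
    n = len(errors_by_dataset)
    # Count each error type once per dataset, so one noisy dataset
    # cannot make an error look widespread on its own.
    presence = Counter()
    for errors in errors_by_dataset.values():
        presence.update(set(errors))
    return sorted(
        (err, count) for err, count in presence.items() if count >= min_share * n
    )


# Hypothetical error logs for six datasets.
errors = {
    "ds1": [("date", "invalid"), ("date", "invalid"), ("category", "missing")],
    "ds2": [("date", "invalid")],
    "ds3": [("date", "invalid"), ("id", "invalid")],
    "ds4": [],
    "ds5": [("category", "missing")],
    "ds6": [("date", "invalid")],
}
print(systemic_errors(errors))  # [(('date', 'invalid'), 4)]
```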

Recommendations for Improvement

The following targeted recommendations address the data integrity issues identified above and aim to improve the reliability of the datasets.

These include establishing rigorous data validation protocols to ensure accuracy and consistency, together with systematic error correction procedures to resolve discrepancies once they are detected.
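A systematic correction procedure might map category labels to a canonical vocabulary and rewrite dates into a single format, logging every change and flagging anything it cannot resolve for manual review rather than guessing. The sketch below assumes a hypothetical category mapping and assumes that slash-separated dates are day-first (`DD/MM/YYYY`); both would need to come from the datasets' actual standards.

```python
from datetime import datetime

# Hypothetical canonical vocabulary: normalized input label -> canonical label.
CANONICAL_CATEGORIES = {
    "retail": "retail",
    "wholesale": "wholesale",
    "online": "online",
    "e-commerce": "online",
}
# Accepted input date formats (assumption: slash dates are day-first).
INPUT_DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y"]


def correct_record(record):
    """Return (corrected_record, changes, needs_review) without mutating record."""
    fixed = dict(record)
    changes = []          # (field, old_value, new_value) for auditing
    needs_review = False

    raw_cat = str(record.get("category") or "")
    key = raw_cat.strip().lower()
    if key in CANONICAL_CATEGORIES:
        canonical = CANONICAL_CATEGORIES[key]
        if canonical != raw_cat:
            fixed["category"] = canonical
            changes.append(("category", raw_cat, canonical))
    else:
        needs_review = True  # unknown label: leave it for a human to decide

    raw_date = str(record.get("date") or "")
    for fmt in INPUT_DATE_FORMATS:
        try:
            iso = datetime.strptime(raw_date, fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
        if iso != raw_date:
            fixed["date"] = iso
            changes.append(("date", raw_date, iso))
        break
    else:
        needs_review = True  # no accepted format matched

    return fixed, changes, needs_review


fixed, changes, review = correct_record({"id": 2, "category": "E-Commerce ", "date": "01/04/2023"})
print(fixed)    # {'id': 2, 'category': 'online', 'date': '2023-04-01'}
print(changes)  # [('category', 'E-Commerce ', 'online'), ('date', '01/04/2023', '2023-04-01')]
print(review)   # False
```

Keeping the change log alongside each corrected record makes every correction auditable and can be reviewed when refining the validation rules.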

Implementing these measures should foster a culture of continuous improvement and give stakeholders grounds to trust and use the datasets effectively.

Conclusion

In summary, the evaluation shows that the identified datasets suffer from inconsistencies and inaccuracies. The findings underscore the urgent need for robust data validation and error correction mechanisms. By building reliability into these datasets, stakeholders can establish trust and ensure that strategic decisions rest on accurate, consistent information.