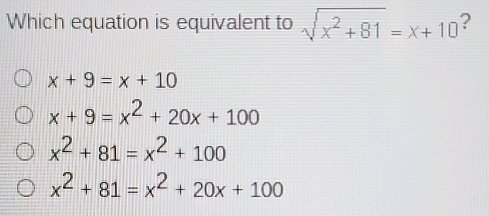

The cross-system data validation of identifiers 1314403445, 8338290955, 913239067, 603111684, 958803632, and 120706141 uncovered notable inconsistencies across multiple datasets. Cross-referencing and consistency checks exposed significant discrepancies in data accuracy. These findings raise critical questions about current data governance practices, and understanding their implications is essential for keeping data accurate and reliable across systems.

Overview of Critical Identifiers

Critical identifiers are the anchors of cross-system data validation: unique markers that let records describing the same entity be matched and verified across disparate platforms.

Because identifiers can appear in different data types and formats across systems, they typically have to be normalized to a common form before records can be matched reliably. Once shared identifiers are in place, organizations can correlate records across complex data landscapes, which supports data integrity and interoperability while each system retains control of its own data sets.
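Because the same identifier can be stored in different formats across systems, records are usually normalized to one canonical form before they are compared. The sketch below assumes, purely for illustration, that the canonical form is a non-empty string of ASCII digits and that stored values may contain whitespace or hyphens; `normalize_id` is a hypothetical helper, not part of any particular system.

```python
import re
from typing import Optional, Union

# Assumption: canonical identifiers are non-empty strings of ASCII digits.
# Assumption: stored values may contain whitespace or hyphens as separators.
_SEPARATORS = re.compile(r"[\s-]")
_DIGITS = re.compile(r"[0-9]+")


def normalize_id(raw: Union[str, int]) -> Optional[str]:
    """Return the canonical form of an identifier, or None if it cannot be normalized."""
    if isinstance(raw, bool):
        return None  # bool is a subclass of int, so reject it explicitly
    if isinstance(raw, int):
        return str(raw) if raw >= 0 else None
    cleaned = _SEPARATORS.sub("", raw)
    # fullmatch on [0-9]+ avoids str.isdigit(), which also accepts non-ASCII digits
    return cleaned if _DIGITS.fullmatch(cleaned) else None


print(normalize_id(913239067))         # 913239067
print(normalize_id(" 1314-403-445 "))  # 1314403445
print(normalize_id("12AB"))            # None
```

If identifiers can carry leading zeros, storing them as integers in one system would silently drop those zeros, so a real normalization rule might also pad to a fixed width; that step is deliberately left out of this sketch.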

Methodology for Data Validation

A robust validation methodology is essential for ensuring the accuracy and reliability of information across systems.

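The process involves identifying the relevant data sources and applying validation techniques such as cross-referencing (matching records by identifier across sources), consistency checks (confirming that matched records agree on shared fields), and anomaly detection (flagging values that deviate from expected patterns).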
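Cross-referencing and consistency checks can be sketched as a comparison of two systems keyed by identifier: first find identifiers that exist in only one system, then compare shared fields for identifiers that exist in both. The system names (`crm`, `billing`), field names, and record values below are invented for illustration and do not reflect the actual findings; `cross_check` is a hypothetical helper.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Record = Dict[str, str]  # field name -> value


@dataclass
class CrossCheckReport:
    missing_in_b: List[str] = field(default_factory=list)  # ids found only in system A
    missing_in_a: List[str] = field(default_factory=list)  # ids found only in system B
    # (id, field name, value in A, value in B)
    mismatches: List[Tuple[str, str, Optional[str], Optional[str]]] = field(default_factory=list)


def cross_check(system_a: Dict[str, Record], system_b: Dict[str, Record],
                fields: List[str]) -> CrossCheckReport:
    report = CrossCheckReport()
    ids_a, ids_b = set(system_a), set(system_b)
    # Cross-referencing: which identifiers appear in only one system?
    report.missing_in_b = sorted(ids_a - ids_b)
    report.missing_in_a = sorted(ids_b - ids_a)
    # Consistency check: for identifiers in both systems, compare shared fields.
    for rid in sorted(ids_a & ids_b):
        for name in fields:
            a_val = system_a[rid].get(name)
            b_val = system_b[rid].get(name)
            if a_val != b_val:
                report.mismatches.append((rid, name, a_val, b_val))
    return report


# Hypothetical example data.
crm = {
    "1001": {"status": "active", "country": "US"},
    "1002": {"status": "closed", "country": "DE"},
}
billing = {
    "1001": {"status": "active", "country": "US"},
    "1002": {"status": "active", "country": "DE"},
    "1003": {"status": "active", "country": "FR"},
}
report = cross_check(crm, billing, ["status", "country"])
print(report.missing_in_a)  # ['1003']
print(report.mismatches)    # [('1002', 'status', 'closed', 'active')]
```

Keeping missing records and field mismatches as separate lists reflects that they usually point to different root causes: a sync gap versus conflicting updates.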

Findings and Discrepancies

The validation results shed light on the integrity of information across systems.

Notably, data accuracy varied from one dataset to another, and the combination of validation techniques proved effective at surfacing these differences. Certain datasets contained inconsistencies that warranted closer examination of the processes that produce them.
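One way to make "variations in data accuracy" measurable is to compute, for each dataset, the share of compared field values that agree with a reference system, and to flag datasets that fall below a threshold for further examination. This is a minimal sketch under assumptions: the choice of reference system, the field list, the 0.95 threshold, and the helper names `agreement_rate` and `flag_for_review` are all hypothetical, and the example records are invented.

```python
from typing import Dict, List, Optional

Record = Dict[str, str]  # field name -> value


def agreement_rate(dataset: Dict[str, Record], reference: Dict[str, Record],
                   fields: List[str]) -> Optional[float]:
    """Share of compared field values in `dataset` that equal the reference values.

    Only identifiers present in both mappings are compared; returns None when
    there is nothing to compare.
    """
    compared = matched = 0
    for rid in dataset.keys() & reference.keys():
        for name in fields:
            compared += 1
            if dataset[rid].get(name) == reference[rid].get(name):
                matched += 1
    return matched / compared if compared else None


def flag_for_review(rates: Dict[str, Optional[float]], threshold: float = 0.95) -> List[str]:
    """Names of datasets whose agreement rate is below `threshold` or could not be computed."""
    return sorted(name for name, rate in rates.items() if rate is None or rate < threshold)


# Hypothetical example data.
reference = {"1001": {"status": "active"}, "1002": {"status": "closed"}}
crm = {"1001": {"status": "active"}, "1002": {"status": "active"}}
rates = {"crm": agreement_rate(crm, reference, ["status"])}  # 0.5
print(flag_for_review(rates))  # ['crm']
```

Datasets with no overlapping identifiers are flagged rather than silently passed, since their accuracy could not be verified at all.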

These discrepancies underscore the need for ongoing monitoring to keep cross-system information reliable and to support informed decision-making.

Recommendations for Data Integrity

Ensuring robust data integrity requires a multifaceted approach: rigorous validation protocols, regular audits, and comprehensive training for the personnel who manage data.

Data governance should be a priority, with clear policies and well-defined accountability structures.

Quality assurance measures further improve reliability by reducing discrepancies across systems and fostering a culture of continuous improvement in data accuracy and compliance.
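Validation protocols and quality assurance checks are often expressed as named rules that every record must satisfy, so that audits can report exactly which rule each record failed. The rules below (digit-only identifiers, an allowed set of status values, a required country field) are hypothetical examples; in practice they would come from the organization's own governance policies. `RULES` and `run_qa` are illustrative names.

```python
import re
from typing import Callable, Dict, List, Tuple

Record = Dict[str, str]  # field name -> value
Rule = Callable[[Record], bool]

# Hypothetical QA rules; real rules would be defined by governance policy.
RULES: Dict[str, Rule] = {
    "id_is_digits": lambda r: re.fullmatch(r"[0-9]+", r.get("id", "")) is not None,
    "status_allowed": lambda r: r.get("status") in {"active", "closed"},
    "country_present": lambda r: bool(r.get("country")),
}


def run_qa(records: List[Record], rules: Dict[str, Rule] = RULES) -> List[Tuple[str, str]]:
    """Return (record id, rule name) for every rule that a record fails."""
    violations: List[Tuple[str, str]] = []
    for record in records:
        for name, rule in rules.items():
            if not rule(record):
                violations.append((record.get("id", "<missing>"), name))
    return violations


# Hypothetical example records.
records = [
    {"id": "1001", "status": "active", "country": "US"},
    {"id": "10X2", "status": "pending", "country": ""},
]
print(run_qa(records))
# [('10X2', 'id_is_digits'), ('10X2', 'status_allowed'), ('10X2', 'country_present')]
```

Naming each rule makes violation reports auditable over time, which fits the regular audits and accountability structures recommended above.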

Conclusion

In conclusion, cross-system validation of the specified identifiers revealed discrepancies that threaten data integrity and underscore the need for robust validation protocols. By implementing stringent data governance and quality assurance measures, organizations can improve reliability, reduce inconsistencies, and keep their information a sound basis for informed decisions. Continued vigilance is essential to maintaining the integrity of data systems.